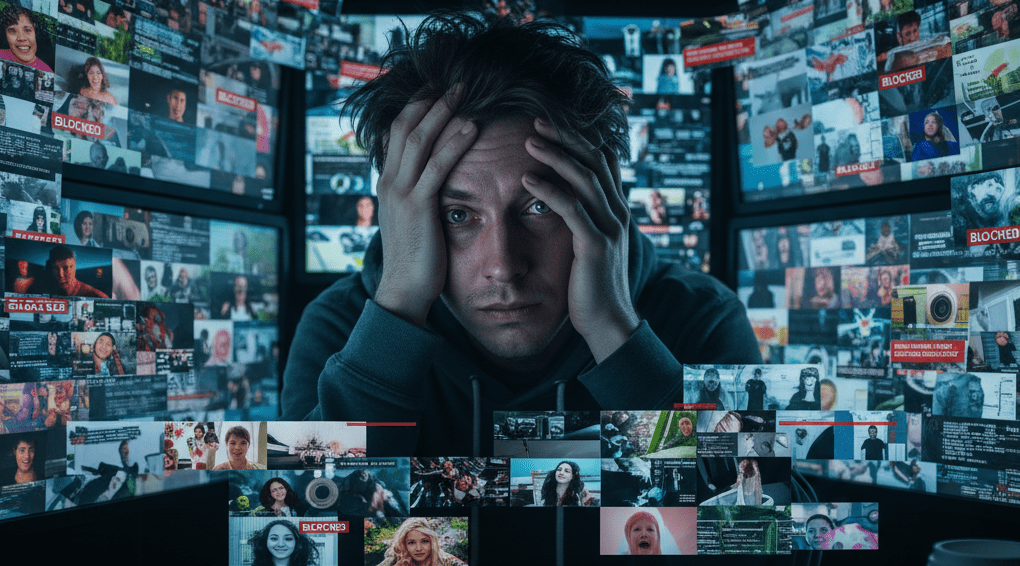

Image moderation is basically the smoke detector of your app. Most of the time it just sits there, silently judging you while doing absolutely nothing. You start thinking, “Do I even need this thing?” That is, until it actually detects something dangerous and you thank all the gods that you put it there.

I’m building a platform that lets users upload images. Instead of spending weeks building my own half-baked machine learning model and ending up with an “AI” that thinks bananas are adult content (because, you know…), I just plugged in the Google Vision API.

Why Google Vision?

Two reasons:

- It works – Google has already trained this thing on more images than I’ll ever see in my lifetime (and believe me, I’ve seen a lot). It detects adult content, medical content, violence, “racy” material, and even spoofs (meme-like trickery). Each category comes with a likelihood rating (VERY_UNLIKELY -> VERY_LIKELY) that you can act on.

- It’s cheap – At the time of writing, SafeSearch Detection costs around $1.50 per 1,000 images. That’s $0.0015 per image, and the first 1,000 images each month are free. Unless you’re running a meme factory with millions of uploads per day, that’s pocket change compared to rolling your own solution.
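To put “pocket change” into actual numbers, here’s a tiny back-of-the-envelope calculator. It’s a sketch that assumes exactly the figures quoted above (first 1,000 images free each month, then $1.50 per 1,000); Google’s real price list may change at higher volume tiers, so check the current pricing page before budgeting with it. The class name is made up.

```java
// Hypothetical helper: estimates a monthly SafeSearch bill from the pricing quoted in the text.
public final class SafeSearchCostEstimator {

    // Assumed figures from the text; verify against Google's current pricing page.
    static final long FREE_IMAGES_PER_MONTH = 1_000;
    static final double USD_PER_1000_IMAGES = 1.50;

    private SafeSearchCostEstimator() {}

    /** Returns the estimated monthly cost in USD for the given number of analyzed images. */
    public static double monthlyCostUsd(long imagesPerMonth) {
        // Only images beyond the monthly free allowance are billed
        long billable = Math.max(0, imagesPerMonth - FREE_IMAGES_PER_MONTH);
        return billable * USD_PER_1000_IMAGES / 1_000.0;
    }

    public static void main(String[] args) {
        // Example: 3,000 uploads a day for 30 days
        long images = 3_000L * 30;
        System.out.printf("%d images -> $%.2f%n", images, monthlyCostUsd(images));
    }
}
```

For 3,000 uploads a day over a 30-day month (90,000 images), 89,000 are billable, which comes to $133.50.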

Image Size: Bigger ≠ Better

Here’s the trick most people miss: don’t feed the API giant 5MB high-res images. Processing time goes up significantly, while accuracy barely improves beyond a certain resolution.

From my own testing, and in line with Google’s recommendations:

- Stick to images around 640px on the longest side.

- The accuracy difference between a 640px image and a full-resolution 4K upload is negligible.

- But processing time? Big difference. Smaller images get responses faster and save you some latency.

So before sending anything to Vision, I downscale the image and convert it to WebP for a smaller file size. The API still does its job, but my endpoint stays snappy.
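Here’s roughly what that downscaling step can look like with nothing but the JDK. It’s a sketch, not my exact code: the 640px cap comes from the advice above, and because the JDK’s ImageIO has no built-in WebP support, this version re-encodes to JPEG instead (for WebP you’d need a third-party ImageIO plugin or an external encoder). The class and method names are hypothetical.

```java
import java.awt.Color;
import java.awt.Graphics2D;
import java.awt.RenderingHints;
import java.awt.image.BufferedImage;
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import javax.imageio.ImageIO;

// Hypothetical helper: shrinks an upload so its longest side is at most MAX_SIDE pixels.
public final class ImageDownscaler {

    public static final int MAX_SIDE = 640;

    private ImageDownscaler() {}

    public static BufferedImage downscale(BufferedImage src, int maxSide) {
        int width = src.getWidth();
        int height = src.getHeight();
        // Scale factor is capped at 1.0, so small images are never upscaled
        double scale = Math.min(1.0, (double) maxSide / Math.max(width, height));
        int newWidth = Math.max(1, (int) Math.round(width * scale));
        int newHeight = Math.max(1, (int) Math.round(height * scale));

        // Always redraw into an opaque RGB image so the JPEG writer accepts it
        BufferedImage out = new BufferedImage(newWidth, newHeight, BufferedImage.TYPE_INT_RGB);
        Graphics2D g = out.createGraphics();
        try {
            // JPEG has no alpha channel: paint transparent areas white first
            g.setColor(Color.WHITE);
            g.fillRect(0, 0, newWidth, newHeight);
            g.setRenderingHint(RenderingHints.KEY_INTERPOLATION,
                    RenderingHints.VALUE_INTERPOLATION_BILINEAR);
            g.drawImage(src, 0, 0, newWidth, newHeight, null);
        } finally {
            g.dispose();
        }
        return out;
    }

    /** Decodes an uploaded file, downscales it and re-encodes it as JPEG bytes. */
    public static byte[] prepareForVision(byte[] upload) throws IOException {
        BufferedImage src = ImageIO.read(new ByteArrayInputStream(upload));
        if (src == null) {
            // ImageIO returns null when no registered reader understands the format
            throw new IOException("Unsupported or corrupt image");
        }
        ByteArrayOutputStream jpeg = new ByteArrayOutputStream();
        if (!ImageIO.write(downscale(src, MAX_SIDE), "jpg", jpeg)) {
            throw new IOException("No JPEG writer available");
        }
        return jpeg.toByteArray();
    }
}
```

To send these bytes instead of a URL, ImageModerationService would build its Image with Image.newBuilder().setContent(ByteString.copyFrom(bytes)) (ByteString being com.google.protobuf.ByteString) rather than an ImageSource URI. Vision accepts WebP as well as JPEG, so swapping the encoder later doesn’t change the request.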

Minimal Working Example (Spring Boot + Java)

This is a very minimal setup. Wiring up the service account is on you; I’m too lazy to spoon-feed everything.
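For the record: with default settings, ImageAnnotatorClient.create() authenticates through Application Default Credentials, so pointing the GOOGLE_APPLICATION_CREDENTIALS environment variable at your service account key file is usually enough. If you’d rather load the key explicitly, a config along these lines should work as a replacement for the GoogleVisionConfig below (not alongside it, since both define an ImageAnnotatorClient bean). It’s a sketch; the class name and the vision.credentials-path property are hypothetical.

```java
import com.google.api.gax.core.FixedCredentialsProvider;
import com.google.auth.oauth2.GoogleCredentials;
import com.google.auth.oauth2.ServiceAccountCredentials;
import com.google.cloud.vision.v1.ImageAnnotatorClient;
import com.google.cloud.vision.v1.ImageAnnotatorSettings;
import java.io.FileInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.util.List;
import org.springframework.beans.factory.annotation.Value;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;

// Hypothetical alternative to GoogleVisionConfig that loads the key file path from app config
@Configuration
public class ExplicitCredentialsVisionConfig {

    @Bean
    public ImageAnnotatorClient imageAnnotatorClient(
            @Value("${vision.credentials-path}") String credentialsPath) throws IOException {
        GoogleCredentials credentials;
        try (InputStream in = new FileInputStream(credentialsPath)) {
            // Parse the service account JSON key and request the general Cloud Platform scope
            credentials = ServiceAccountCredentials.fromStream(in)
                    .createScoped(List.of("https://www.googleapis.com/auth/cloud-platform"));
        }
        // Hand the credentials to the client instead of letting it look up ADC
        ImageAnnotatorSettings settings = ImageAnnotatorSettings.newBuilder()
                .setCredentialsProvider(FixedCredentialsProvider.create(credentials))
                .build();
        return ImageAnnotatorClient.create(settings);
    }
}
```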

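```java
// Config: GoogleVisionConfig.java
import com.google.cloud.vision.v1.ImageAnnotatorClient;
import com.google.cloud.vision.v1.ImageAnnotatorSettings;
import java.io.IOException;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;

@Configuration
public class GoogleVisionConfig {

    // The client holds gRPC channels; Spring calls its close() method on shutdown
    @Bean
    public ImageAnnotatorClient imageAnnotatorClient() throws IOException {
        return ImageAnnotatorClient.create(
                ImageAnnotatorSettings.newBuilder().build()
        );
    }
}
```

```java
// Service: ImageModerationService.java
import com.google.cloud.vision.v1.AnnotateImageRequest;
import com.google.cloud.vision.v1.AnnotateImageResponse;
import com.google.cloud.vision.v1.Feature;
import com.google.cloud.vision.v1.Image;
import com.google.cloud.vision.v1.ImageAnnotatorClient;
import com.google.cloud.vision.v1.ImageSource;
import com.google.cloud.vision.v1.SafeSearchAnnotation;
import java.util.List;
import org.springframework.stereotype.Service;

@Service
public class ImageModerationService {

    private final ImageAnnotatorClient visionClient;

    public ImageModerationService(ImageAnnotatorClient visionClient) {
        this.visionClient = visionClient;
    }

    public SafeSearchAnnotation analyzeImage(String imageUrl) {
        // Vision fetches the image itself from the given URL
        ImageSource imgSource = ImageSource.newBuilder().setImageUri(imageUrl).build();
        Image image = Image.newBuilder().setSource(imgSource).build();

        // Request only the SafeSearch feature
        AnnotateImageRequest request = AnnotateImageRequest.newBuilder()
                .setImage(image)
                .addFeatures(Feature.newBuilder().setType(Feature.Type.SAFE_SEARCH_DETECTION))
                .build();

        // One request in, so take the single response out
        AnnotateImageResponse response = visionClient
                .batchAnnotateImages(List.of(request))
                .getResponses(0);

        if (response.hasError()) {
            throw new IllegalStateException("Vision API error: " + response.getError().getMessage());
        }
        return response.getSafeSearchAnnotation();
    }
}
```

```java
// Controller: ImageController.java
import com.google.cloud.vision.v1.SafeSearchAnnotation;
import org.springframework.web.bind.annotation.PostMapping;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RequestParam;
import org.springframework.web.bind.annotation.RestController;

@RestController
@RequestMapping("/images")
public class ImageController {

    private final ImageModerationService moderationService;

    public ImageController(ImageModerationService moderationService) {
        this.moderationService = moderationService;
    }

    @PostMapping("/moderate")
    public String moderateImage(@RequestParam String url) {
        SafeSearchAnnotation result = moderationService.analyzeImage(url);
        // Each getter returns a Likelihood enum value such as VERY_UNLIKELY or LIKELY
        return "Adult: " + result.getAdult()
                + ", Violence: " + result.getViolence()
                + ", Medical: " + result.getMedical()
                + ", Spoof: " + result.getSpoof()
                + ", Racy: " + result.getRacy();
    }
}
```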

Send a POST request to /images/moderate?url=https://somethinghub.com/image.jpg and you’ll get back the moderation verdict.
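If you want to call the endpoint from Java rather than curl, here’s a small client sketch using the JDK’s built-in java.net.http.HttpClient (Java 11+). The http://localhost:8080 base URL is an assumption (Spring Boot’s default port), and the class name is hypothetical. Since the image URL travels as a query parameter, it gets URL-encoded first.

```java
import java.io.IOException;
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;

// Hypothetical client for the /images/moderate endpoint
public final class ModerationClient {

    private ModerationClient() {}

    /** Builds the endpoint URI; encoding keeps '&', '?' or '=' inside imageUrl from breaking the query. */
    public static URI moderateUri(String baseUrl, String imageUrl) {
        String encoded = URLEncoder.encode(imageUrl, StandardCharsets.UTF_8);
        return URI.create(baseUrl + "/images/moderate?url=" + encoded);
    }

    public static void main(String[] args) throws IOException, InterruptedException {
        URI uri = moderateUri("http://localhost:8080", "https://somethinghub.com/image.jpg");
        // The controller uses @PostMapping, so send a POST with an empty body
        HttpRequest request = HttpRequest.newBuilder(uri)
                .POST(HttpRequest.BodyPublishers.noBody())
                .build();
        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(request, HttpResponse.BodyHandlers.ofString());
        System.out.println(response.statusCode() + " " + response.body());
    }
}
```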

What Google Vision Actually Detects

Google Vision’s SafeSearch feature doesn’t just say “good” or “bad.” It rates every image in five categories:

- adult – “Is this NSFW material?”

- violence – “Is this the Battle of Helm’s Deep in HD?”

- racy – “Suggestive but not quite porn. Basically like your Instagram explore page.”

- medical – “Bloody wounds or surgical stuff.”

- spoof – “Meme content or stuff trying to trick the system.”

Each category gets a likelihood level, from VERY_UNLIKELY through UNLIKELY, POSSIBLE, and LIKELY up to VERY_LIKELY (plus UNKNOWN when Vision can’t tell). You decide your own tolerance threshold. For me, adult, violence, and medical matter most. I don’t really care about spoof and can tolerate racy to some degree.
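Here’s one way to turn that tolerance into code. It’s a sketch with made-up thresholds: adult, violence, and medical get flagged from POSSIBLE upward, racy only from LIKELY, and spoof isn’t checked at all. To stay self-contained it uses a hypothetical local Level enum that mirrors the order of Vision’s Likelihood values; with a real SafeSearchAnnotation you’d convert via something like Level.fromName(result.getAdult().name()).

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical moderation policy; the thresholds are illustrative, not recommendations
public final class ModerationPolicy {

    /** Mirrors the order of Vision's Likelihood enum, from UNKNOWN up to VERY_LIKELY. */
    public enum Level {
        UNKNOWN, VERY_UNLIKELY, UNLIKELY, POSSIBLE, LIKELY, VERY_LIKELY;

        /** Maps a Likelihood name to a Level; anything unrecognized becomes UNKNOWN. */
        public static Level fromName(String name) {
            for (Level level : values()) {
                if (level.name().equals(name)) {
                    return level;
                }
            }
            return UNKNOWN;
        }

        // UNKNOWN sorts lowest, so it never trips a threshold here
        boolean atLeast(Level threshold) {
            return compareTo(threshold) >= 0;
        }
    }

    private ModerationPolicy() {}

    /** Returns the categories that tripped a threshold; an empty list means no manual review. */
    public static List<String> reasonsForReview(Level adult, Level violence, Level medical, Level racy) {
        List<String> reasons = new ArrayList<>();
        // Strict on the categories that matter most
        if (adult.atLeast(Level.POSSIBLE)) reasons.add("adult");
        if (violence.atLeast(Level.POSSIBLE)) reasons.add("violence");
        if (medical.atLeast(Level.POSSIBLE)) reasons.add("medical");
        // Racy is tolerated up to POSSIBLE
        if (racy.atLeast(Level.LIKELY)) reasons.add("racy");
        // Spoof is deliberately not an input
        return reasons;
    }
}
```

If you’d rather be cautious, treat UNKNOWN on the strict categories as a reason for review instead of letting it pass.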

It’s Good But Don’t Fully Trust It

As far as AI has come, I still recommend not putting your platform’s safety entirely in its hands. Every image this flags will be reviewed manually. It’s especially slippery with the violence flag: the violence detection feels kind of fuzzy to me, but then again, even I have a hard time deciding whether an image is too violent to be put on a platform.

The reverse holds too. There will be times when dangerous content slips past the AI moderation, so I will definitely add a report function where users can flag an image, and those reports will also be reviewed manually.
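Both paths, AI flags and user reports, can feed the same manual review queue. Below is a minimal in-memory sketch of that idea; the class, image IDs, and reason strings are all hypothetical, and a real platform would persist the queue in a database rather than in memory.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Optional;

// Hypothetical in-memory review queue shared by AI flags and user reports
public final class ReviewQueue {

    /** Where a review request came from. */
    public enum Source { AI_FLAG, USER_REPORT }

    /** One image awaiting a human decision, with every reason it was queued. */
    public record Item(String imageId, List<String> reasons) {}

    // Insertion-ordered and keyed by image ID, so repeat flags merge into one entry
    private final Map<String, List<String>> pending = new LinkedHashMap<>();

    public synchronized void submit(String imageId, Source source, String reason) {
        pending.computeIfAbsent(imageId, id -> new ArrayList<>()).add(source + ": " + reason);
    }

    /** Hands the oldest pending image to a moderator and removes it from the queue. */
    public synchronized Optional<Item> next() {
        var it = pending.entrySet().iterator();
        if (!it.hasNext()) {
            return Optional.empty();
        }
        Map.Entry<String, List<String>> oldest = it.next();
        // Copy the data out before removing the entry from the map
        Item item = new Item(oldest.getKey(), List.copyOf(oldest.getValue()));
        it.remove();
        return Optional.of(item);
    }

    public synchronized int size() {
        return pending.size();
    }
}
```

Keying by image ID means an image that the AI flagged and a user also reported shows up once, with both reasons attached for the moderator.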
